Seeking a new direction – the future of the multiple choice question

Where does the multiple choice question go from here?

Multiple choice tests have been a staple of learner evaluation for a century. Even as technology has evolved and student needs have changed, the MCQ test has basically remained the same.

One advocate for change is Professor Geoffrey Crisp of the University of New South Wales, who has written extensively on the topic. I contacted him to hear his thoughts on how the multiple choice question is used, and whether he felt it could be reinvented for the digital age.

Multiple options for multiple needs

Learnosity

The MCQ divides opinion. Some believe it can measure knowledge quickly, easily and cheaply, while others feel it fails to really evaluate a learner’s deeper thinking skills. As someone with an academic background in e-assessment, do you feel the MCQ has had its day? Or have test designers simply failed to adapt the MCQ to meet changing learner needs?

Prof. GC

The format for an MCQ is something familiar to both teachers and students. In this sense it has some advantages as the format does not confuse users or get in the way of responding to the question. The main issue with MCQs is that they have traditionally been used for recall rather than analyzing or evaluating. So the trick is to use the simple format of MCQs but have more sophisticated questions.

Now the issue here is that most teachers try to do this by having very long, complicated stems and options. These often confuse the student, who then takes a long time to actually work out what concept is being assessed in the question.

Some options here are to embed a link in the MCQ to a digital tool or resource that students must use to solve the problem. This could be a simulation, a video or audio file, a 3D image that can be manipulated, or an Excel spreadsheet with macros. This way students need to understand the concepts to be able to use the tool in order to answer the question. Of course, students can still guess, but the question is not based on recall.

The other approach to asking more sophisticated MCQ questions is to have linked MCQs that require students to respond to the first part of the MCQ by choosing the option they think is most appropriate. The second part of the MCQ is the rationale based on the concept being assessed.
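A linked MCQ of this kind could be modeled roughly as follows. This is only a minimal sketch: the class name, field names, and the sample question are hypothetical, and the scoring rule (credit only when both the answer and its rationale are correct) is an assumption rather than something specified in the interview.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinkedMCQ:
    """A two-part question: choose an answer, then choose the rationale for it."""
    stem: str
    answer_options: tuple[str, ...]
    rationale_options: tuple[str, ...]
    correct_answer: int     # index into answer_options
    correct_rationale: int  # index into rationale_options


def score(question: LinkedMCQ, answer: int, rationale: int) -> int:
    """Assumed rule: 1 point only if both parts are correct, otherwise 0."""
    both_correct = (
        answer == question.correct_answer
        and rationale == question.correct_rationale
    )
    return 1 if both_correct else 0


# Hypothetical sample question, used purely for illustration.
example = LinkedMCQ(
    stem="A sealed balloon is moved from a warm room into a freezer. "
         "What happens to its volume?",
    answer_options=("It increases", "It decreases", "It stays the same"),
    rationale_options=(
        "Cooler gas particles move more slowly and push less on the balloon wall",
        "Cold air outside the balloon pulls the rubber outward",
        "Temperature has no effect on a sealed gas",
    ),
    correct_answer=1,
    correct_rationale=0,
)
```

Under this assumed rule, a lucky guess on the first part earns nothing unless the student also picks the matching rationale, which is the point of linking the two parts.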

Learnosity

I recently came across a piece in the Washington Post by Terry Heick, an education blogger, in which he raised some very interesting points about how technology has changed how people receive and even experience information. It’s worth quoting a section from the piece:

“In the 21st century, change is happening at an incredible pace. Access to information is disrupting traditional processes and their related mechanisms … Printed texts have gone from being the final word to simply one step in an endless chain of making information public. Texts are now merged with moving images, hyperlinked, designed to be absorbed into social media habits, and endlessly fluid. From an essay to a blog post, an annotated YouTube video to a STEAM-based video game, a tweet to digital poetry, the seeking and sharing of ideas is an elegant kind of chaos.”

This is something that seems to chime well with the thoughts you previously expressed in your own research:

“Rather than attempt to mimic paper-based assessments, e-assessments should offer authentic problems that require students to manipulate authentic tools in order to respond to a question.”

Because information gathering is now such a fluid experience, do you feel that there are other question types that educators should be leveraging to help digital assessment better reflect information’s fluidity and how modern learners are responding to it?

Prof. GC

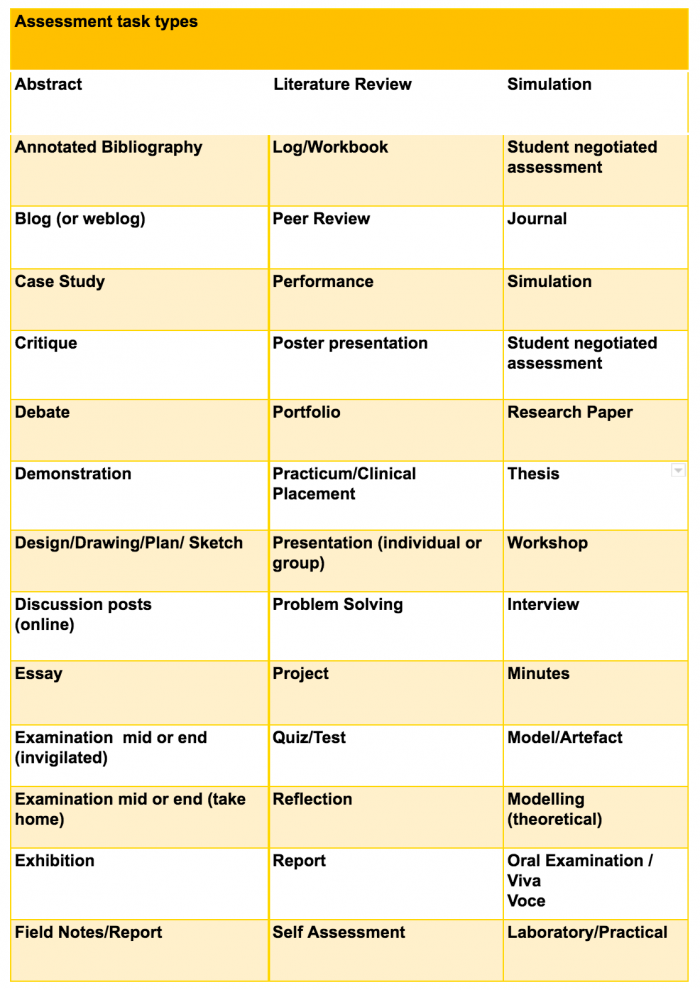

We definitely should be using a wider variety of assessment task types. I have summarized this at the end of the document from a table I have used before when encouraging teachers to think about the variety of their assessment task types.

Not all of these assessment types are suitable for online delivery but many can be successfully used either face to face or online. The main issue is to change the task so that it facilitates engagement with the key concepts and ideas of the discipline and uses the discipline tools rather than an artificial environment that we tend to create because it is administratively easier.

Simulations, scenario-based activities, and role plays are powerful formats for students to engage more meaningfully with key concepts and ideas. Not all problems have easy solutions and we need to move students from thinking that all problems have a “correct” answer to realizing that there are often multiple options for solving a problem. The main issue is to understand the consequences of the various options. Students should be encouraged to justify their solution to a problem based on multiple criteria.

Interactivity, interoperability, and the future of learning

Learnosity

You’ve long been an advocate of adding interactive elements to MCQs such as browser plugins, Java applets, QuickTime VR, and so on. Technology now affords greater potential for interaction in assessment than ever before. The team at Learnosity recently held a hack day in which some of the guys developed a basic Virtual Reality-based MCQ. Do you think advances like this will be the norm for future generations of learners – the merging of physical and virtual activities? How might such advances be more effective at evaluating students’ full capabilities?

Prof. GC

The technology changes so quickly that many of the tools we used 5-10 years ago are no longer relevant but the concept has remained the same (Q1). So the approach we took of separating the tool from the question (hypertext link) I think was the correct one. The questions can remain the same (or similar) even if the tool is replaced – especially if the question was based on a key concept or idea for the discipline.

Learnosity

You once wrote that:

“Since teachers may be using different assessment software, the method for incorporating the interactive tool should be applicable to all operating platforms and all common learning or assessment management systems.”

Do you think this lack of unity in education technology makes it difficult for educators or test designers to introduce assessments that are more complex or in depth? How important do you think technological interoperability is to solving the problem (if, indeed, one exists)?

Prof. GC

Learnosity is providing innovative and pedagogically sound approaches to assessment. As you would appreciate, I have been working to have solutions that are independent of the specific software used to generate and deliver the assessment. There will always be a tension between vendors offering a specific product, no matter how good it is, and the simple fact that different institutions will choose to operate a wide variety of software packages for their assessment.

The major impediment to change in assessment practices is not the need for better software, but the need for teachers to realize that their traditional assessment formats are out of date.

There is an urgent need for a resetting of the conceptual understanding of the purpose of assessment. If we continue to assess in the ways we have done for the last 30 years then we are doing a great disservice to our students and we are not preparing them for the world in which they operate.

Of course, vendors like Learnosity can facilitate this change by offering approaches that are relatively easy for teachers to adopt. So I do appreciate the role of innovative vendors!

Learnosity

It seems that many educators still think about assessment in a summative capacity, neglecting its formative potential (the old instructivist v constructivist division). Looking further ahead, is it possible that technical advances might enable assessments that are capable of testing not just knowledge, but how that knowledge was constructed in the first place? In other words, to measure exactly how we learn, not just what?

Prof. GC

Again, teachers will need to appreciate that there are different types of assessment. Some label them as diagnostic, formative and summative, or as assessment for learning, assessment as learning and assessment of learning. Some assessment is designed for current learning and some is designed for future learning (Geoffrey T. Crisp [2012] Integrative assessment: reframing assessment practice for current and future learning, Assessment & Evaluation in Higher Education, 37:1, 33-43, DOI: 10.1080/02602938.2010.494234).

A contribution vendors could make is to provide scaffolding in their software for the different types of assessment.

This would prompt teachers to offer a range of assessments, not only task types, but purpose types. Teachers could be prompted to include a diagnostic assessment at the beginning of new sections, to include formative assessment with feedback during the unit and then a summative one at key points in the learning journey.