Four suggestions for getting privacy right when developing edtech

As digital interactions increase, so does the flow of learner data—and the risk of its misuse. But edtech can respect and protect learner privacy. Here’s how.

Edtech is, and perhaps always will be, in the privacy spotlight.

In order to bring the benefits of technology to learning and assessment, we need to capture information about the learning process and people’s skills. The more data we capture, the better the analysis we can do and the better the learning or assessment will be.

But with great amounts of data comes great responsibility. And so regulators, politicians, learners and their stakeholders are keen to ensure that privacy is respected.

Three of the most pressing concerns are that:

- Learners, parents and those with their interests at heart need to ensure personal learner data is used only for learning or assessment and not for marketing or commercial purposes.

- With data breaches so shockingly common, everyone wants to make sure that private data about learners is not hacked or disclosed.

- Governments and other stakeholders are often keen to ensure that information on their young people isn’t disclosed to foreign governments.

Most edtech companies are highly reputable. However, edtech is expanding rapidly: one report suggests that edtech venture funding in 2021 was three times its pre-pandemic level. Inevitably, there is increasing legislative and regulatory interest in privacy and edtech.

Recent regulatory developments

Let’s start with President Biden’s State of the Union address in March:

“It’s time to strengthen privacy protections, ban targeted advertising to children, demand tech companies stop collecting personal data on our children.”

Although Biden’s main focus was social media companies, there is a natural spillover to edtech. And although the address was only a speech, new US legislation to protect children is being actively discussed in Washington.

Let’s move on to California, which is often a leader in privacy among US states. Many privacy bills that could affect edtech are under consideration there. For example, one proposed bill (AB-2486) would set up an Office for the Protection of Children Online to protect the privacy of children using digital media. Another (SB-1172) would add privacy protections for proctored exams in an educational setting. These bills have not yet passed, and may not pass this year, but they reflect legislators’ concern.

Meanwhile in Europe, regulators are stepping up enforcement of the GDPR privacy law, with over 1,000 GDPR fines recorded to date. Some of these are in the education space, including fines for inappropriate remote proctoring and various data breaches. A current regulatory focus is personal data transferred to the US without sufficient supplementary measures to protect it.

Many other countries are also passing new legislation to protect personal data, and this trend is going to increase, not decrease.

What can you do about it?

Privacy is not something you do once and don’t have to worry about again; it needs to be a permanent part of your modus operandi. Here are four suggestions for edtech companies to consider.

1. Build privacy into your design and design process

It’s a cliché, but it really is true: retrofitting privacy later is much more expensive than building it in from the start. As part of this, consider pseudonymization, which means separating learners’ names and other identifying information from the data about them. It is described in more detail below.

2. Adopt an approach of full transparency with learners and their stakeholders

Document what data you gather, why you collect it, what you do with it and how long you keep it. The law often requires this, but going beyond the minimum, doing it proactively and communicating it well will help build trust with your learners and stakeholders.
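One way to keep this documentation accurate is to maintain it as a machine-readable data inventory from which both the privacy notice and retention checks are generated. The Python sketch below assumes that approach; the `DataItem` fields, the example entries and the retention periods are all hypothetical, chosen only for illustration, and real categories and periods depend on your product and the applicable law.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class DataItem:
    """One entry in a hypothetical data inventory."""

    name: str
    purpose: str         # why it is collected
    usage: str           # what is done with it
    retention_days: int  # how long it is kept


# Illustrative entries only, not a recommendation for any real product.
INVENTORY = [
    DataItem("learner_id", "linking responses to a learner", "scoring and reporting", 365),
    DataItem("item_responses", "assessment scoring", "scoring and item analysis", 365),
    DataItem("session_logs", "security and troubleshooting", "incident investigation", 90),
]


def is_expired(item: DataItem, collected_on: date, today: date) -> bool:
    """True if a record collected on `collected_on` has outlived its retention period."""
    return today > collected_on + timedelta(days=item.retention_days)


def render_notice(inventory: list[DataItem]) -> str:
    """Turn the inventory into plain-language lines for a privacy notice."""
    return "\n".join(
        f"{item.name}: collected for {item.purpose}; used for {item.usage}; "
        f"kept for {item.retention_days} days"
        for item in inventory
    )


print(render_notice(INVENTORY))
print(is_expired(INVENTORY[2], date(2022, 1, 1), date(2022, 4, 2)))  # True
```

Generating the notice from the same inventory that drives retention checks helps keep what you tell learners consistent with what your systems actually do.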

3. Your privacy is only as good as that of your suppliers and partners

Many privacy and security failures originate with third parties, so review your vendors’ privacy practices and put good data protection contracts in place. At Learnosity, we strive to be leaders in assessment privacy and work only with trusted suppliers.

4. Get someone on your team who knows or learns about privacy

Privacy is principle-based, and understanding these principles will stand you in good stead in the myriad product and communication decisions made day to day. The IAPP (International Association of Privacy Professionals) is a good place to learn and get certified. Two of my Learnosity colleagues and I hold IAPP certifications, and they help us greatly.

Pseudonymity: Reducing risk by reducing identifiability

At the recent ATP Innovations in Testing conference, I led a session with Marc Weinstein of Caveon on “Pseudonymity, an Answer to Assessment Privacy Concerns?” (the whitepaper we wrote for the session with Jamie Armstrong, Learnosity general counsel, is available for download below).

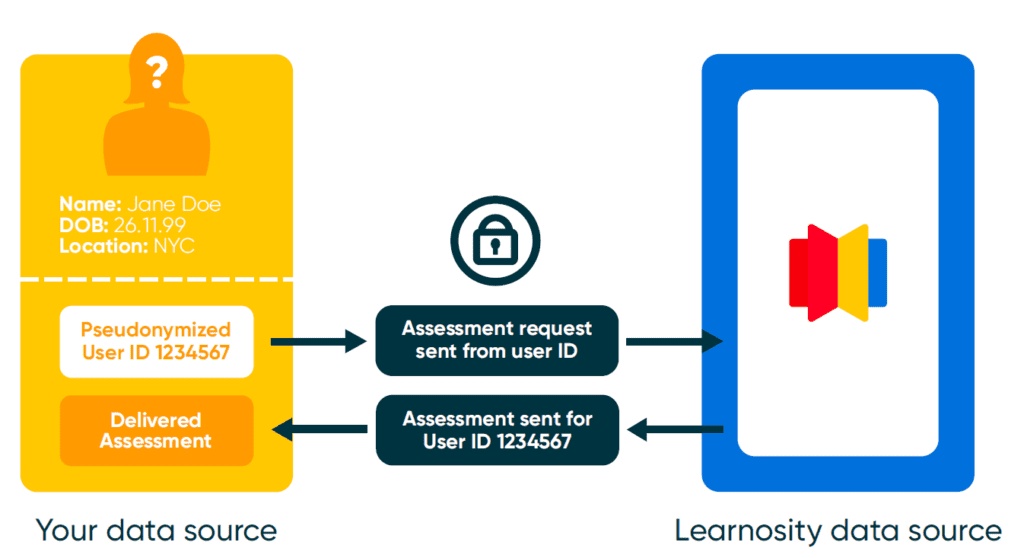

Pseudonymity is a way of storing electronic data in which names and other information that identify a person are kept separately from the data about them. With pseudonymity, learner data is associated with a numeric ID representing the learner rather than with the person’s name. A separate index, stored apart from the learner data, maps each numeric ID back to the name.
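The storage split just described can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Learnosity’s implementation: `IdentityIndex` and `LearnerDataStore` are hypothetical names, both stores are in-memory dictionaries, and IDs are random nine-digit strings drawn from Python’s `secrets` module. In practice the two stores would sit in separate systems with separate access controls.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class IdentityIndex:
    """Hypothetical identity index: the only place an ID maps back to a name."""

    _id_to_name: dict[str, str] = field(default_factory=dict)

    def register(self, name: str) -> str:
        # Draw a random 9-digit ID that reveals nothing about the learner,
        # retrying in the unlikely event of a collision.
        while True:
            learner_id = f"{secrets.randbelow(10**9):09d}"
            if learner_id not in self._id_to_name:
                self._id_to_name[learner_id] = name
                return learner_id

    def lookup(self, learner_id: str) -> str:
        return self._id_to_name[learner_id]


@dataclass
class LearnerDataStore:
    """Hypothetical learning-data store keyed only by pseudonymous ID."""

    _records: dict[str, list[dict]] = field(default_factory=dict)

    def add_result(self, learner_id: str, result: dict) -> None:
        self._records.setdefault(learner_id, []).append(result)

    def results(self, learner_id: str) -> list[dict]:
        return list(self._records.get(learner_id, []))


# Usage: the learning data never contains the learner's name.
index = IdentityIndex()
store = LearnerDataStore()
jane_id = index.register("Jane Doe")
store.add_result(jane_id, {"activity": "quiz-1", "score": 8})
print(store.results(jane_id))  # [{'activity': 'quiz-1', 'score': 8}]
print(index.lookup(jane_id))   # Jane Doe
```

Anyone with access to `store` alone sees only IDs and scores; linking them back to a person requires access to `index` as well.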

For example, Learnosity customers know who their learners are, but they pass only a pseudonymized ID to Learnosity APIs. In the diagram below, the learner is called Jane Doe, and the ID “1234567” is generated for her. Learnosity knows only this ID, not the learner’s name, address or date of birth. Learnosity’s APIs deliver the assessment and pass the result back to the client without ever knowing who the learner is.
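The division of knowledge in this example can be illustrated with a small simulation. This is a hypothetical sketch, not Learnosity’s API: `AssessmentService` stands in for any third-party assessment provider, `Client` for the customer, and scoring is reduced to counting answers that match a key. What it shows is that the name never crosses the boundary; only the ID and the responses do, and re-identification happens on the client side.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AssessmentResult:
    learner_id: str
    score: int
    max_score: int


class AssessmentService:
    """Hypothetical third-party assessment provider.

    It receives only a pseudonymous ID and responses, never a name,
    address or date of birth.
    """

    def __init__(self, answer_key: dict[str, str]) -> None:
        self._answer_key = answer_key
        self.seen_ids: list[str] = []  # every learner identifier the provider receives

    def score(self, learner_id: str, responses: dict[str, str]) -> AssessmentResult:
        self.seen_ids.append(learner_id)
        correct = sum(
            1 for question, answer in self._answer_key.items()
            if responses.get(question) == answer
        )
        return AssessmentResult(learner_id, correct, len(self._answer_key))


class Client:
    """Hypothetical customer system: the only party that knows real identities."""

    def __init__(self, service: AssessmentService) -> None:
        self._service = service
        self._roster: dict[str, str] = {}  # pseudonymous ID -> real name

    def add_learner(self, learner_id: str, name: str) -> None:
        self._roster[learner_id] = name

    def run_assessment(self, learner_id: str, responses: dict[str, str]) -> tuple[str, int, int]:
        # Only the ID and the responses are passed to the provider.
        result = self._service.score(learner_id, responses)
        # Re-identification happens locally, using the client's own roster.
        return self._roster[result.learner_id], result.score, result.max_score


service = AssessmentService({"q1": "b", "q2": "d"})
client = Client(service)
client.add_learner("1234567", "Jane Doe")
name, score, max_score = client.run_assessment("1234567", {"q1": "b", "q2": "a"})
print(name, score, max_score)  # Jane Doe 1 2
print(service.seen_ids)        # ['1234567']
```

After the run, `service.seen_ids` contains only `"1234567"`, which is the sense in which the provider delivers the assessment without knowing who the learner is.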

Pseudonymous data is still personal data, but pseudonymity reduces the number of people or systems with access to real identities and so greatly reduces compliance and security risks.

For more on pseudonymity and how it can keep learners’ personal data safer, download the whitepaper 👇